The global target of limiting warming to 1.5 °C has become a major focus for every industry, prompting strict measures to reduce the carbon footprint across the value chain. In the process-manufacturing space in particular (oil and gas, metals and mining, chemicals, pharma), we have reached an alarming situation in which controlling emissions and optimizing resource consumption is a must. Every small step toward improvement helps us achieve the global 1.5 °C target.

Energy, being directly associated with carbon release, has been one of the main candidates for rational conservation. Energy is always conserved by nature, but it is also transferred from one localized region to another: in this case, from the factory to the atmosphere, where it is released mainly as heat, together with the CO2 from the fuel burned to produce it (increasing entropy across the ecosystem). The remaining global carbon budget for limiting warming to 1.5 °C is estimated at roughly 500 gigatonnes of CO2, and staying within it requires cutting emissions by about 45% from 2010 levels by 2030. Based on certain studies, every kWh of electricity produced emits about 0.9 to 0.95 kg of CO2. Of course, this estimate depends on the energy source used for power generation, whether coal, natural gas, or petroleum.
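As a quick worked example of the figures above, the sketch below converts electricity use into a CO2 range using the 0.9 to 0.95 kg/kWh estimate quoted in this section. The function name is hypothetical, and the default factors are only the quoted estimate: they should be replaced with the emission factor of the actual generation mix.

```python
def co2_from_electricity(kwh: float, factor_low: float = 0.90, factor_high: float = 0.95) -> tuple[float, float]:
    """Return the (low, high) CO2 emissions in kg for a given electricity use in kWh.

    The default factors are the 0.9-0.95 kg CO2/kWh estimate quoted in the text;
    they are an assumption and depend heavily on the power source.
    """
    if kwh < 0:
        raise ValueError("kwh must be non-negative")
    return kwh * factor_low, kwh * factor_high


# Example: a plant section that draws 10,000 kWh in a day
low, high = co2_from_electricity(10_000)
print(f"{low:.0f} to {high:.0f} kg CO2")  # 9000 to 9500 kg CO2
```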

Several recent developments help organizations calculate and project their CO2 footprint from their daily movements, production, resource use, and energy consumption. This helps them identify which parts of the footprint can be controlled and where there is room for improvement.
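A minimal sketch of such footprint accounting, assuming each daily activity (electricity, fuel, vehicle movements) is multiplied by an emission factor and the results are summed. The `Activity` class, the quantities, the production figure, and the emission factors for natural gas and diesel are hypothetical placeholders for illustration, not reference values; the electricity factor follows the range quoted earlier.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    quantity: float  # amount consumed in the period, in `unit`
    unit: str
    ef: float        # assumed emission factor, kg CO2e per `unit` (placeholder)


def footprint(activities):
    """Return the total kg CO2e and a per-activity breakdown."""
    breakdown = {a.name: a.quantity * a.ef for a in activities}
    return sum(breakdown.values()), breakdown


# One day of hypothetical plant data; all emission factors are placeholders.
day = [
    Activity("electricity", 10_000, "kWh", 0.90),
    Activity("natural gas", 2_000, "m3", 2.0),
    Activity("diesel (site vehicles)", 500, "L", 2.7),
]
total, parts = footprint(day)
production_t = 100.0  # hypothetical daily output, tonnes of product

print(f"total: {total:.0f} kg CO2e, intensity: {total / production_t:.1f} kg CO2e/t")
print("largest contributor:", max(parts, key=parts.get))
print(f"projected annual (330 operating days): {total * 330 / 1000:.0f} t CO2e")
```

Ranking the breakdown points to the largest contributor, which is a natural place to start looking for improvements, and dividing by production gives an intensity that can be compared from day to day.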

Digitalization has shown the potential not just to track CO2-equivalent (CO2eq) emissions, but also to optimize and control carbon release. Sub-optimal operations that consume more fossil fuel and electricity than required are one clear target for reducing emissions.

In this article, we will look at how modern optimization techniques based on artificial neural networks (ANNs) can help organizations reduce their carbon footprint in real time. Neural networks have been among the most widely applied algorithms of the past decade, with applications ranging from image analytics to speech recognition. They are now also being tried in sensor-based analytics, an area where shop-floor decisions have traditionally relied on the experience of engineers and operators who have spent their careers running complex processes. Even with so much experience, the dynamics of nature, processes, and people sometimes make it difficult to sustain optimal operation consistently. Changing market conditions, the quality of fuels and raw materials, and upstream and downstream operations are all interlinked, creating a colossal matrix of complexity that is difficult to decipher and untangle.

Framework for the Optimization

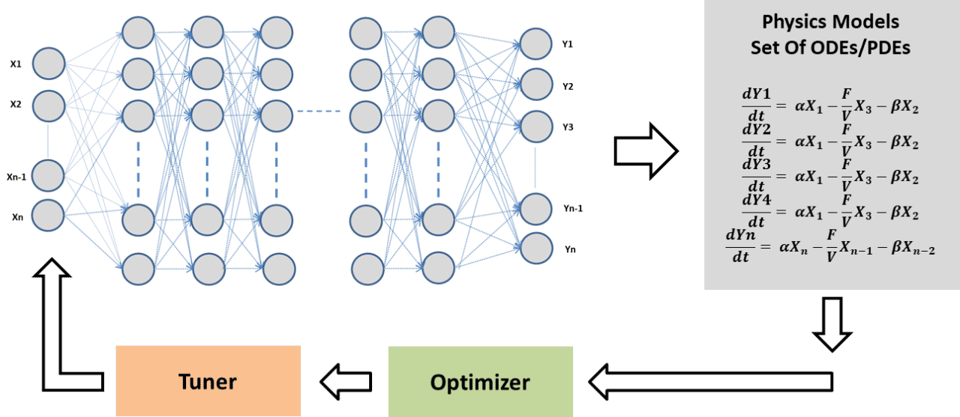

A typical framework has to account for dynamic factors that can change in scenarios that go unnoticed. The impact of these scenarios on product quality and energy consumption is difficult to estimate unless it is monitored or measured in real time. The framework consists of the following essential components:

1. Neural Networks (or any other equivalent model)

2. Physics-based constraints

3. Prediction & Recommendation system

Most of us are familiar with how neural networks work, but things become more interesting when layers of first principles are added on top of them as constraints. Purely data-driven models often misinterpret patterns in historical data, even after extensive training and validation. First-principles constraints map the underlying physics and correct misleading predictions that would not be physically possible in practice.
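A minimal sketch of such a correction, assuming a hypothetical fired heater whose fuel energy is predicted by a model. The constraint used here is that the fuel energy can never fall below the heat the process stream absorbs, Q = m * cp * dT, because efficiency cannot exceed 100%. The function names, the heat capacity, and the numbers are illustrative assumptions, and this hard "rectify" step applied after prediction is only one way to impose a constraint, not necessarily the authors' implementation.

```python
import numpy as np

CP = 4.18  # assumed heat capacity of the process stream, kJ/(kg K)


def theoretical_duty_mw(feed_kg_s, delta_t_k, cp=CP):
    """First-principles minimum heat duty Q = m * cp * dT, converted from kW to MW."""
    return np.asarray(feed_kg_s, dtype=float) * cp * np.asarray(delta_t_k, dtype=float) / 1000.0


def rectify(raw_pred_mw, feed_kg_s, delta_t_k):
    """Raise any predicted fuel energy that falls below the physical minimum up to that minimum."""
    return np.maximum(np.asarray(raw_pred_mw, dtype=float), theoretical_duty_mw(feed_kg_s, delta_t_k))


raw = [0.45, 0.20, 0.90]  # hypothetical network outputs, MW
fixed = rectify(raw, feed_kg_s=[2.0, 1.5, 3.0], delta_t_k=[40.0, 50.0, 60.0])
print(fixed)  # the second prediction is lifted to the physical minimum of 0.3135 MW
```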

The model compares its predictions with the outcomes implied by the mechanistic constraints and uses that error margin to correct its learning over successive iterations, getting closer and closer to the actual outcomes. These constraints are usually known dynamics of the system that can never be violated, such as mass and energy balances. In many cases, this kind of architecture has also been used to estimate unknown parameters in the governing equations.
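The feedback loop described here can be sketched as a physics-penalized training loss: the usual data-fit error plus a penalty whenever a prediction violates the first-principles bound, so that gradient descent pulls the model back toward physically consistent outputs. This is a minimal sketch under stated assumptions: the fired-heater data are synthetic, a deliberately simple linear model stands in for the neural network to keep the gradients explicit, and the penalty weight `lam` is a hypothetical tuning knob. The last lines show the simplest case of parameter estimation, recovering an unknown heater efficiency from the mechanistic equation by least squares.

```python
import numpy as np

# Synthetic, hypothetical fired-heater data (all values are illustrative assumptions).
rng = np.random.default_rng(0)
n = 200
m = rng.uniform(1.0, 5.0, n)                      # feed rate, kg/s
dT = rng.uniform(20.0, 60.0, n)                   # temperature rise, K
CP = 4.18                                         # assumed heat capacity, kJ/(kg K)
q_min = m * CP * dT / 1000.0                      # first-principles minimum duty, MW
q_fuel = q_min / 0.8 + rng.normal(0.0, 0.02, n)   # "measured" fuel energy, MW (true efficiency 0.8)

# Linear model on standardized features, standing in for the neural network.
X = np.column_stack([np.ones(n), (m - m.mean()) / m.std(), (dT - dT.mean()) / dT.std()])


def loss_and_grad(w, lam):
    pred = X @ w
    resid = pred - q_fuel
    # Positive wherever the model predicts less fuel than physics says is needed.
    violation = np.maximum(q_min - pred, 0.0)
    loss = np.mean(resid**2) + lam * np.mean(violation**2)
    grad = (2.0 / n) * (X.T @ resid) - lam * (2.0 / n) * (X.T @ violation)
    return loss, grad


def train(lam, lr=0.02, steps=2000):
    """Plain gradient descent; lr is small enough for this convex, smooth loss."""
    w = np.zeros(X.shape[1])
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(w, lam)
        history.append(loss)
        w = w - lr * grad
    return w, history


for lam in (0.0, 5.0):
    w, history = train(lam)
    below = int(np.sum(X @ w < q_min))
    print(f"lam={lam}: final loss {history[-1]:.5f}, {below} predictions below the physical minimum")

# Parameter estimation: q_fuel = q_min / eta is linear in k = 1/eta,
# so ordinary least squares recovers k (and hence eta) directly.
k_hat = np.sum(q_min * q_fuel) / np.sum(q_min**2)
print(f"estimated efficiency: {1.0 / k_hat:.3f}")
```

With `lam=0.0` the model is fitted to the data alone; a positive `lam` trades some data fit for fewer and smaller violations of the physical bound.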

How does this enable a reduction in carbon footprint?

Many of you may be wondering how this actually helps reduce the carbon footprint.

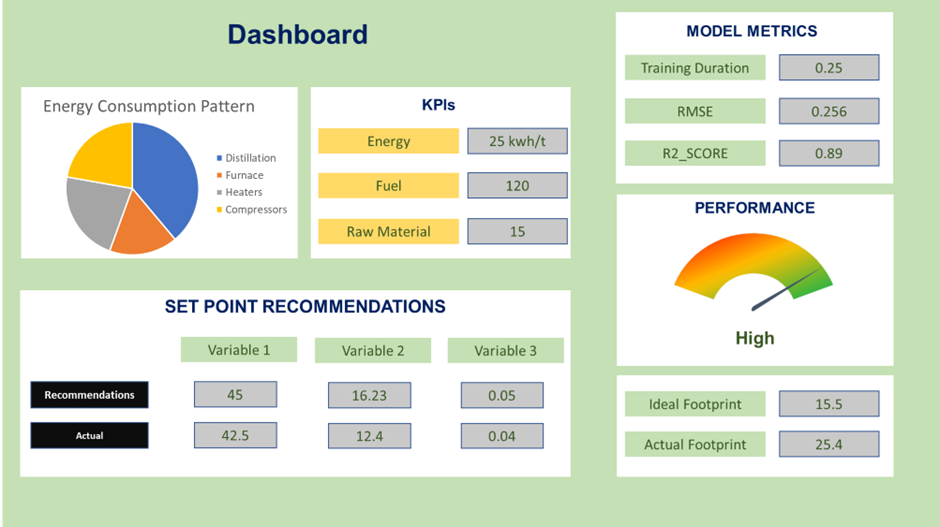

The idea is simple: the first-principles model, which captures the stoichiometric (theoretical) demand for energy and other resources, quantifies how far actual operation deviates from the optimal level. This deviation estimates the excess consumption, and hence the excess emissions, that could have been avoided under ideal operation. To keep operation at optimal levels, the framework continuously generates recommendations in real time and prescribes the set points to be fed to the controller. It also keeps comparing the actual carbon footprint with the ideal one, which keeps the organization aware of its actual demand and its scope for improvement.
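The two halves of this idea, measuring the gap between the actual and ideal footprint and prescribing a set point, can be sketched as follows. Everything here is a hypothetical illustration: the emission factor of 202 kg CO2 per MWh of fuel energy is an assumed round figure for natural-gas combustion, `surrogate_fuel_mw` is a made-up stand-in for the trained network, and the 2.0% minimum excess-O2 limit stands in for a physics-based constraint such as complete combustion. A real system would use a proper optimizer and pass the set point to the control system.

```python
EF_KG_PER_MWH = 202.0  # assumed emission factor: natural-gas combustion, kg CO2 per MWh of fuel energy


def footprint_gap(actual_fuel_mwh, ideal_fuel_mwh, ef=EF_KG_PER_MWH):
    """Return (actual, ideal, avoidable) CO2 in kg for one reporting interval."""
    actual = actual_fuel_mwh * ef
    ideal = ideal_fuel_mwh * ef
    return actual, ideal, max(actual - ideal, 0.0)


def surrogate_fuel_mw(excess_o2_pct):
    """Hypothetical stand-in for the trained network: predicted fuel use vs. excess-O2 set point."""
    return 10.0 + 0.15 * (excess_o2_pct - 1.8) ** 2 + 0.05 * excess_o2_pct


def recommend_setpoint(candidates, min_o2_pct=2.0):
    """Return the feasible candidate set point with the lowest predicted fuel use."""
    feasible = [c for c in candidates if c >= min_o2_pct]  # physics-based constraint
    if not feasible:
        raise ValueError("no candidate satisfies the constraint")
    return min(feasible, key=surrogate_fuel_mw)


# The ideal fuel figure would come from the first-principles model
# (theoretical duty divided by the best achievable efficiency).
actual, ideal, avoidable = footprint_gap(actual_fuel_mwh=250.0, ideal_fuel_mwh=220.0)
print(f"actual {actual:.0f} kg, ideal {ideal:.0f} kg, avoidable {avoidable:.0f} kg CO2")
# actual 50500 kg, ideal 44440 kg, avoidable 6060 kg CO2

setpoint = recommend_setpoint([1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0])
print(f"recommended excess-O2 set point: {setpoint}%")  # recommended excess-O2 set point: 2.0%
```

Here the unconstrained minimum of the surrogate lies below the 2.0% limit, so the constraint is binding and the recommendation sits at the boundary, which is exactly the case where first-principles limits keep the optimizer from prescribing an unsafe or infeasible set point.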

If you are interested in learning more about how this framework can help your industry, please reach out to us at analytics@tridiagonal.com.

Written by

Parth Prasoon Sinha

Principal Engineer - Analytics
